TL;DR: AI can help developers write code faster with autocomplete, diagnose error messages and technical problems, generate code such as bash scripts and unit tests, summarise technical conversations and summarise changes for code reviews.

Over the last couple of years, I've sprinkled AI usage through various typical software engineering activities.

The most common tools (and what I tend to use) have been Copilot and Copilot Chat, but some free tools such as Llama Coder are beginning to become more mainstream.

In this article I'll summarise the scenarios where I found AI useful.

- Writing code faster with autocomplete

- Getting help with error messages

- Getting help with technical problems

- Writing bash scripts

- Generating unit test code

- Summarising conversations

- Code reviews

- General thoughts

Writing code faster with autocomplete#

AI-powered autosuggest was a great help when I had to write a chunk of code that's necessarily verbose, but not unique to my problem.

A perfect example is given on the Copilot extension homepage.

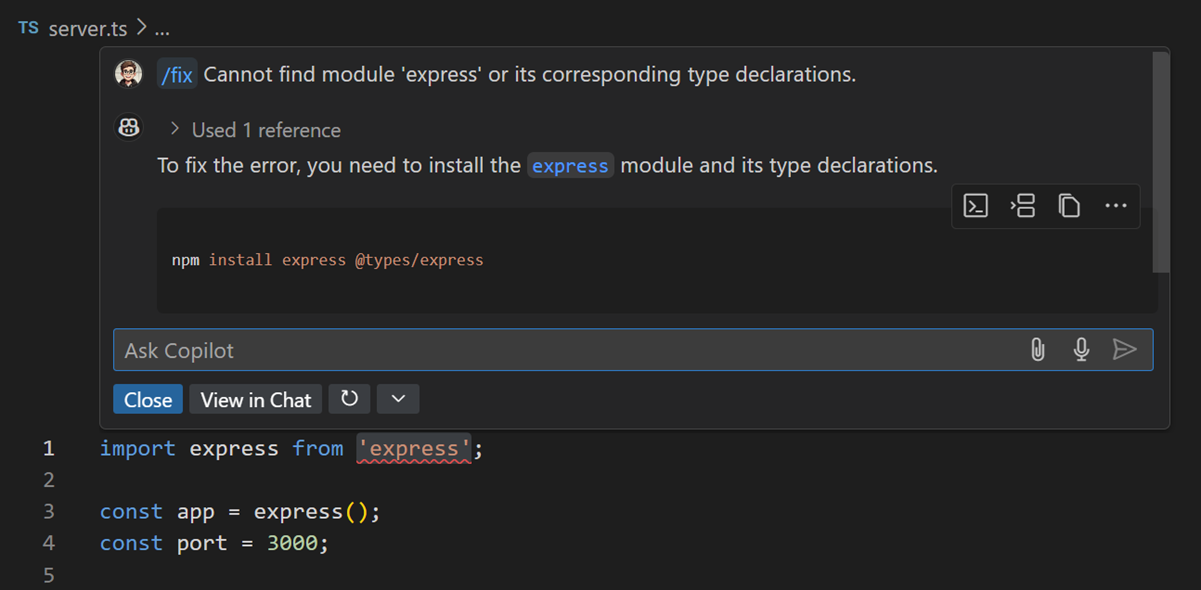

Getting help with error messages#

Pasting an error message into Copilot Chat, prefixed with /fix, can provide good guidance on the cause and fix for a given error.

When the responses given aren't suitable, follow-up prompts such as "can you suggest some other alternatives" can help.

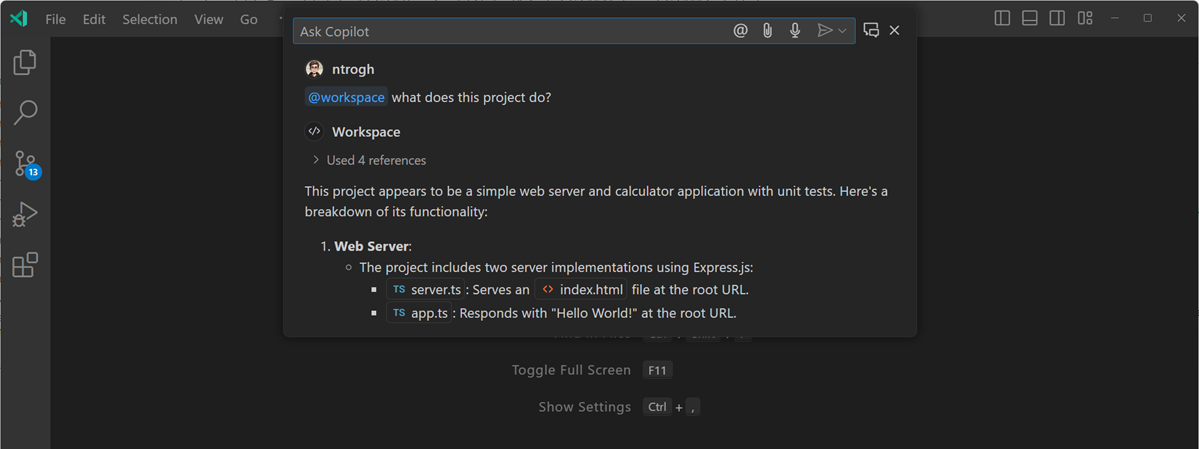

Getting help with technical problems#

Similar to error messages, I found that expressing a technical problem in an AI-powered chat can yield some good ideas and solutions.

This worked even better when I followed up the initial question with clarifications and/or challenged the AI to think of additional ideas or alternatives.

Writing bash scripts#

Bash scripts can perform many kinds of general tasks. Once you have a script that does what you want, you can re-use it any number of times.

A specific need I had was to see all the authors who had edited a particular file in a Git repository. With the help of ChatGPT I was able to write a script that achieved what I wanted.

Here's the prompt I used:

Please write a bash script which finds and lists all the unique authors who have edited the given file in the current Git repository. The given file will be specified as the first parameter to the bash script.

I've published the resulting script as a GitHub Gist: list_authors.sh.

The script takes as an argument the path to a file in that repo. It then uses git log on the current repository to find all the authors who have edited the file, sorts and uniqs them and outputs them to stdout.
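The Gist itself isn't reproduced here. As a rough sketch of the approach just described (not the published script), something along these lines would work. The function name list_authors, the usage message and the use of --follow to keep tracking the file across renames are assumptions made for illustration:

```shell
#!/usr/bin/env bash
# Sketch only: an illustration of the approach, not the published Gist.

list_authors() {
  local file="${1:-}"
  if [ -z "$file" ]; then
    echo "Usage: list_authors <path-to-file>" >&2
    return 1
  fi

  echo "Authors who have edited '$file':"
  # %an and %ae are the author name and email of each commit.
  # --follow (an assumption here) keeps tracking the file across renames.
  # sort -u sorts the lines and drops duplicates in one step.
  git log --follow --format='%an <%ae>' -- "$file" | sort -u
}

# Run only when a path is given on the command line.
if [ "$#" -gt 0 ]; then
  list_authors "$@"
fi
```

It needs to be run from inside the repository, since git log works on the current repository.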

Here's a sample of the output from running the script from the Gist on a file in the Next.js repository:

$ list_authors packages/next/src/shared/lib/head.tsx

Authors who have edited 'packages/next/src/shared/lib/head.tsx':

Adam Stankiewicz <sheerun@sher.pl>

Filipe Medeiros <filipesilvamedeiros@gmail.com>

Gerald Monaco <gerald@gmonaco.me>

JJ Kasper <jj@jjsweb.site>

...

Generating unit test code#

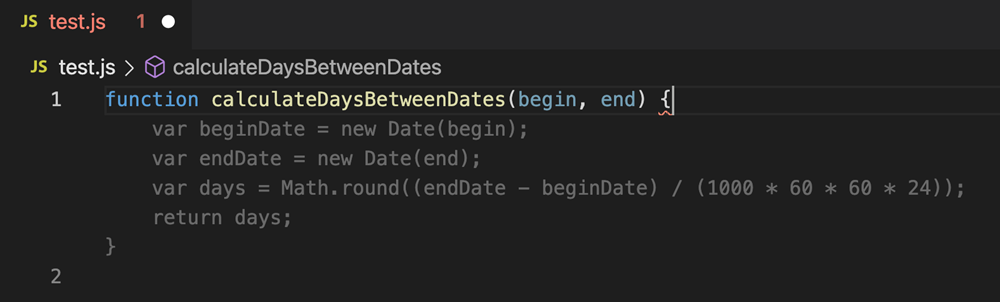

I sometimes use GitHub Copilot to generate unit tests for a particular code file I am working on.

This can be done by opening the file under test, pressing Cmd+I and asking Copilot to write a unit test for the current file.

I would use a prompt like: "Please write a unit test for this file".

I use this mainly to set up the scaffolding, such as describe and it blocks and basic assertions. Usually I need to tidy up a few things here and there, but the AI is surprisingly good at generating decent test code.

Another technique is to provide just the code for one function or code block and ask AI to generate test code for it, listing a few test cases.

Here's an example of a prompt I might use:

Please write a Jest unit test for a Typescript function.

The unit test should have the following it blocks:

- returns a list of dates between the two dates passed

- returns an empty array if two identical dates are passed

- throws an error if null is passed

Please write tests that satisfy the above it blocks.

Here is the Typescript function:

```
function calculateDaysBetweenDates(begin, end) {
  var beginDate = new Date(begin);
  var endDate = new Date(end);
  // 1000 * 60 * 60 * 24 is the number of milliseconds in a day
  var days = Math.round((endDate - beginDate) / (1000 * 60 * 60 * 24));
  return days;
}
```

Summarising conversations#

Some colleagues and I had a long back-and-forth about code reviews in a GitHub discussion.

When it came time to summarise all of this in a meeting, I found that AI-powered chat was a great way to condense the discussion and capture everyone's thoughts on what we had discussed.

It looks like ChatGPT now has a quick Summarise button for this purpose.

Code reviews#

When reviewing pull requests I've been experimenting with various ways of asking AI to help me.

One way I found useful was to have AI review and summarise the changes and overall quality.

Here are the steps I took for doing this with Copilot Chat:

- Generate a diff between the pull request source and destination branches.

  - git checkout <source-branch> to check out the branch

  - git diff <destination-branch> | pbcopy to run the diff and copy the output

- Paste the whole diff into a new empty tab in VS Code

- Press Cmd+I and prompt Copilot: "Please review these code changes"
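If pbcopy isn't available (it's a macOS command), the diff can be written to a file instead. Here's a minimal sketch of that variation. The function name pr_diff and the default file name pr.diff are made up for illustration. It also swaps in git's three-dot diff, which compares the source branch against its merge base with the destination branch, so no checkout is needed. That is a different technique from the two commands in the steps above and can give a different diff if the destination branch has moved on.

```shell
#!/usr/bin/env bash
# Sketch only: pr_diff and pr.diff are hypothetical names.

# Usage: pr_diff <destination-branch> <source-branch> [output-file]
pr_diff() {
  local dest="${1:-}" src="${2:-}" out="${3:-pr.diff}"
  if [ -z "$dest" ] || [ -z "$src" ]; then
    echo "Usage: pr_diff <destination-branch> <source-branch> [output-file]" >&2
    return 1
  fi

  # Three-dot form: changes on the source branch since it diverged
  # from the destination branch.
  git diff "$dest...$src" > "$out" || return 1
  echo "Diff written to $out"
}
```

For example, pr_diff main my-feature would write pr.diff, which can then be opened in VS Code for the remaining steps.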

Here's a sample of the kinds of results I tended to get (anonymised):

The code changes introduce several new features and modifications to the healthcare application. Here are the key points:

- Localization Updates: Added new localization strings for transfer confirmations in en-AU.json.

- Removed Mock Initialization: Removed the conditional import of mock initialization in layout.tsx.

- New Patient Transfer Form Submission Logic: Created a new file submit.ts to handle the patient transfer form submission logic. This includes creating a Ward object, making a patient transfer request, and handling different states of the transfer (success, delay, error, no-response).

- Transfer Form Tests: Updated transfer-form.test.tsx to reflect changes in patient name and ward number and added tests for the new patient transfer form submission logic.

Overall, these changes enhance the patient transfer functionality by providing detailed feedback on the transfer status and improving the test coverage for various scenarios.

Not as good as a human reviewer, but not useless either.

And not bad given the tiny effort on my part!

General thoughts#

Code is valuable

I've heard the idea that code is no longer valuable, since AI can now write it.

I disagree. In fact, I think well written code is more valuable with the advent of AI. When working with code through an AI tool, we need the AI to be able to understand the code, reason about it, draw clear and distinct conclusions and make concise edits which we can easily review to identify mistakes. Well written, consistent code enables this.

Even better: if we can state our coding standards explicitly to the AI, using project-specific configuration (such as CLAUDE.md files in Claude Code) and AI "memory" features, then we can improve the effectiveness and quality of the code changes made by the AI.

Psychological hurdle to AI adoption

From introspection (and some observation), the biggest hurdle holding engineers back from fully utilising AI tools is the emotional and psychological adaptation. The technology itself is not especially cognitively demanding. I'd argue the shift to functional programming or to the Cloud was as hard as, or harder than, the shift to AI. I think the important thing is a willingness to learn and adapt. This mental flexibility can be improved over time, with techniques such as making a habit of continuous learning and exploring cognitive biases.

Conclusion#

While I feel AI might have been a little over-hyped, I think there's still merit in becoming familiar with it and using it where it fits.

It seems likely that AI adoption among software engineers will grow, so it will be advantageous to be familiar and conversant with the tooling.

Further reading and viewing#

- Pragmatic techniques to get the most out of GitHub Copilot • Patrick CHANEZON, Burke HOLLAND, Brigit MURTAUGH, Isidor NIKOLIC, Allison WEINS, Martin WOODWARD

- Notes on using LLMs for code • Simon WILLISON