TL;DR: AI-enabled capabilities can be surfaced in user interfaces in a variety of ways, including inline suggestions, single- and multi-agent chat, hints for filling in inputs, and controls that let the user nudge a preview until it matches what they want.

As AI capabilities become increasingly available to everyday development teams, it might be useful to explore ways to surface these capabilities to end-users.

This article describes some user interface patterns for surfacing AI.

Using "treat it like a person" as a core principle, I describe each pattern, give examples (including in-the-wild cases) and explain how the pattern mimics human behaviour.

Principles#

As the definition of "AI" is still somewhat in flux, I want to assume a definition for the purposes of this article:

The capability of software/machines to do things that are normally thought of as human, in a rational way.

The fact that AI mimics human thinking/behaviour suggests that user interfaces should expose AI capabilities in a human way.

As Ethan Mollick puts it in the book Co-Intelligence (bold mine):

AI doesn’t act like software, but it does act like a human being. I’m not suggesting that AI systems are sentient like humans, or that they will ever be. Instead, I’m proposing a pragmatic approach: treat AI as if it were human because, in many ways, it behaves like one. This mindset, which echoes my “treat it like a person” principle of AI, can significantly improve your understanding of how and when to use AI in a practical, if not technical, sense.

The patterns I've encountered are:

- Inline suggestion

- Single-agent chat

- Multi-agent chat

- Fill-in-the-blanks

- Nudging controls

Let's look at them in detail...

Pattern: Inline suggestion#

While performing a task, relevant ideas are displayed nearby in text or even imagery.

The user might be invited to provide input, such as selecting between variants or filling in a blank. (See Fill-in-the-blanks pattern)

Example: As a product owner enters a story into a task tracking system, the AI suggests edge cases they didn't yet consider.

In the wild: Code assistance plug-ins in IDEs (such as Genie AI, Amazon Q, CodeGPT, Codeium and Llama Coder) and dedicated IDEs (such as Cursor). Email and text message completion, as seen in Gmail, LinkedIn messaging and Apple Messages.

Mimics: 🧍 Colleague, mentor, friend, etc. sitting nearby verbally offering a suggestion and/or physically pointing to a part of the screen.

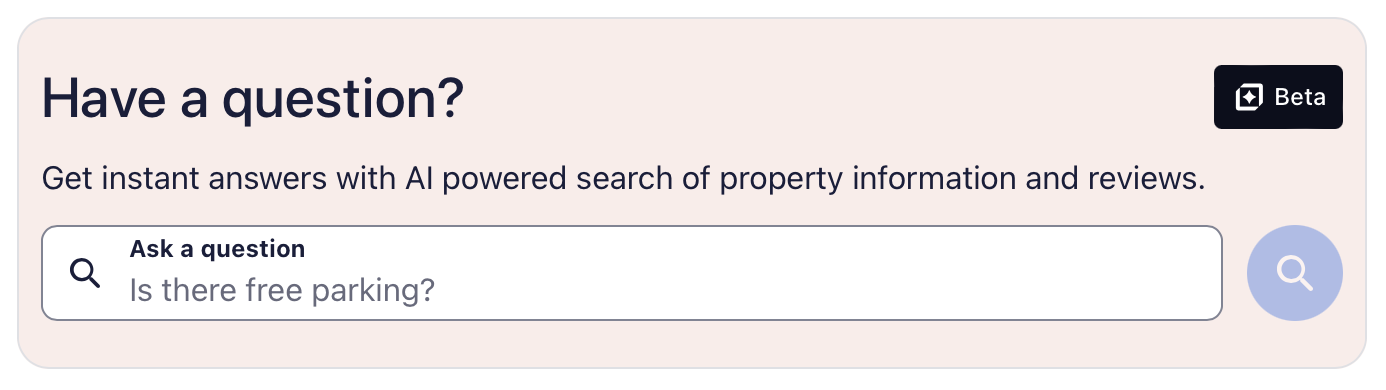
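To make the inline suggestion pattern a little more concrete, here is a minimal sketch of the logic that might sit behind the product-owner example. Everything in it is an assumption for illustration: `stub_model` is a hypothetical keyword-based stand-in for a real AI call, and `min_chars` is an invented threshold for deciding when it is polite to start suggesting.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Suggestion:
    text: str               # what the UI shows next to the user's draft
    accepted: bool = False  # set by the UI when the user picks the suggestion


def stub_model(draft: str) -> List[str]:
    """Hypothetical stand-in for an AI call: edge cases the draft has not mentioned."""
    checks = {
        "empty": "What happens when the input is empty?",
        "offline": "What happens when the user is offline?",
    }
    lowered = draft.lower()
    return [hint for keyword, hint in checks.items() if keyword not in lowered]


def inline_suggestions(
    draft: str,
    model: Callable[[str], List[str]] = stub_model,
    min_chars: int = 20,
) -> List[Suggestion]:
    """Return suggestions to render beside the draft, or nothing if it is too short."""
    if len(draft.strip()) < min_chars:
        return []  # stay quiet until the user has written something substantial
    return [Suggestion(text) for text in model(draft)]
```

The point of the threshold is the same as with a colleague sitting nearby: they wait until you have written something before chiming in.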

Pattern: Single-agent chat#

A specialised chat bot is available for back-and-forth discussion.

Example: A customer on an e-commerce website starts entering a question about a product. A specialised chat bot replies with a detailed response. An input box allows the customer to respond with a follow-up question.

In the wild: "Have a question?" feature on Hotels, "QnaBot" on Amazon.

Mimics: 💬 Colleague, mentor, customer service, etc. communicating with the user via chat.

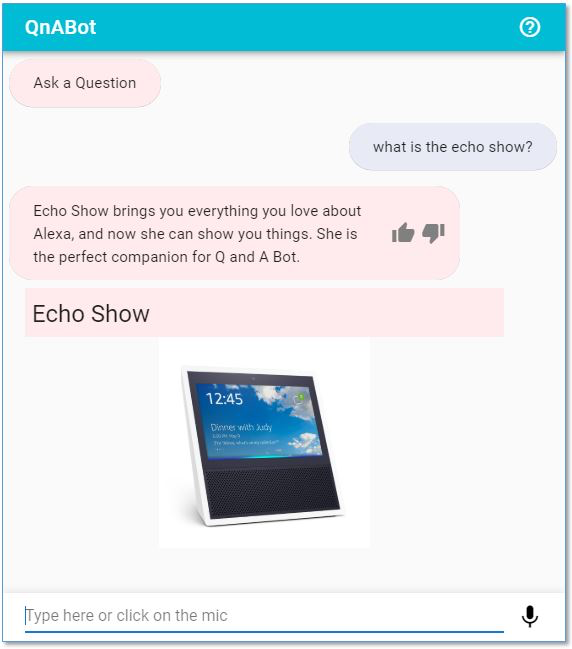
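The back-and-forth in single-agent chat depends on the bot seeing the whole conversation, not just the latest message. A rough sketch of that idea follows; `stub_product_bot` is a hypothetical stand-in for a specialised model, and the `(role, text)` message shape is my own assumption rather than any particular vendor's format.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (role, text), where role is "user" or "bot"


def stub_product_bot(history: List[Message]) -> str:
    """Hypothetical stand-in for a specialised model; it receives the full history."""
    user_turns = sum(1 for role, _ in history if role == "user")
    latest = history[-1][1]
    return f"Answer {user_turns} about: {latest}"


@dataclass
class ChatSession:
    respond: Callable[[List[Message]], str] = stub_product_bot
    history: List[Message] = field(default_factory=list)

    def ask(self, text: str) -> str:
        """Record the user's message, get a reply based on all turns so far, record it."""
        self.history.append(("user", text))
        reply = self.respond(self.history)
        self.history.append(("bot", reply))
        return reply
```

Because the history travels with every call, a follow-up such as "How deep?" can be interpreted in light of the earlier question.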

Pattern: Multi-agent chat#

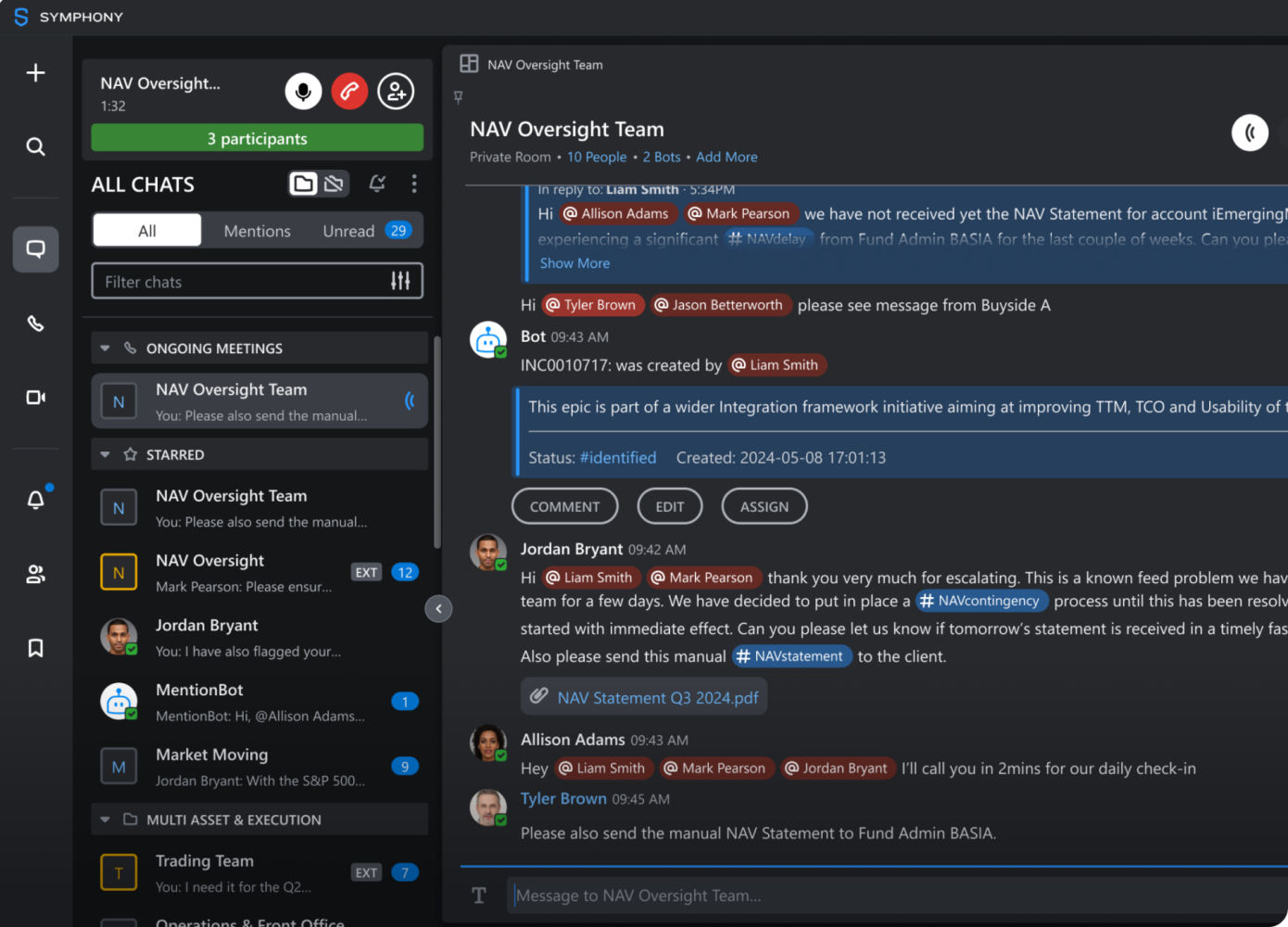

Multiple chat bots appear in the same chat window. Each bot has a different persona and perspective, and only contributes where applicable.

By splitting AI responses among multiple bots, rather than just one, it's easier for the user to mentally divide the AI output into different "buckets".

Also, because this is analogous to real human-to-human teamwork, it's intuitive for people.

Users can address individual bots by name, to ask for further assistance on a specific topic or aspect covered by just that bot.

Example: Financial advisor tool for recommending products to customers. Agents representing analysts and compliance each offer a perspective. The advisor uses these insights to prepare for a meeting with the client.

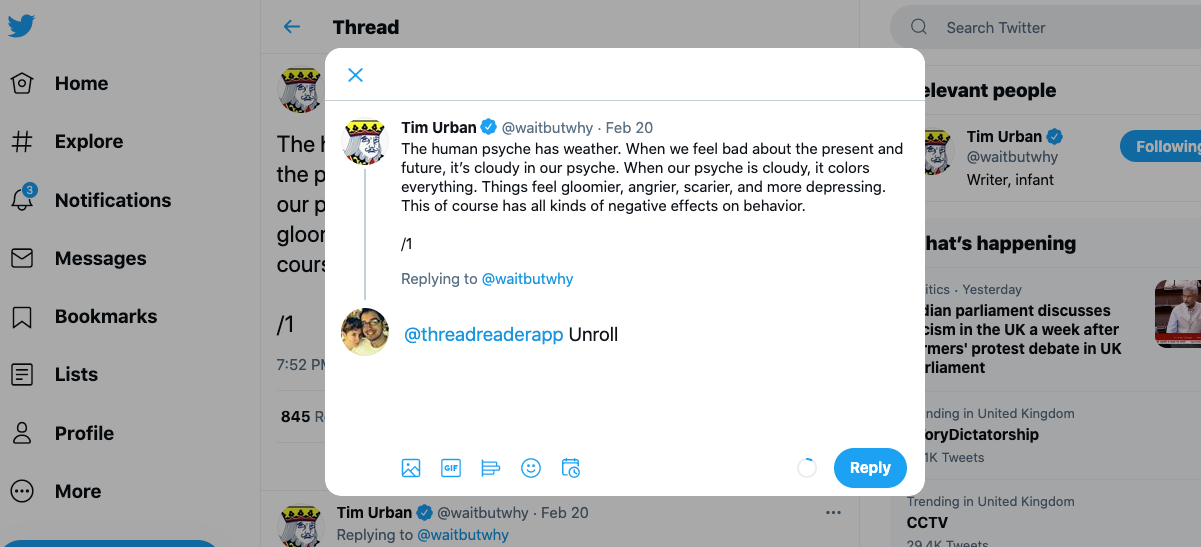

In the wild: Slack automation bots such as Trello for Slack, ThreadReaderApp on Twitter and dedicated chat platforms such as Symphony.

Mimics: 👭 Group of people working together, such as a team meeting.
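Two behaviours described above lend themselves to a small sketch: each bot contributes only where applicable, and users can address a bot by name. The code below is one possible way to express that, under stated assumptions: the `@name` mention syntax and the keyword-based relevance rule are both hypothetical, and a real system would likely ask a model whether a persona has something to add.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Agent:
    name: str
    keywords: Tuple[str, ...]  # topics this persona cares about (hypothetical relevance rule)

    def reply(self, message: str) -> Optional[str]:
        """Contribute only where applicable; None means the agent stays silent."""
        lowered = message.lower()
        if any(keyword in lowered for keyword in self.keywords):
            return f"[{self.name}] has a view on: {message}"
        return None


def route(message: str, agents: List[Agent]) -> List[str]:
    """Send the message to agents addressed by @name, or offer it to all agents otherwise."""
    mentioned = {name.lower() for name in re.findall(r"@(\w+)", message)}
    named = [agent for agent in agents if agent.name.lower() in mentioned]
    if named:
        # An agent addressed by name always answers, even outside its usual topics.
        return [f"[{agent.name}] answering directly: {message}" for agent in named]
    replies = (agent.reply(message) for agent in agents)
    return [reply for reply in replies if reply is not None]
```

With an `Agent("Analyst", ("yield", "risk"))` and an `Agent("Compliance", ("disclosure", "risk"))`, a question about risk draws a reply from both, a question about yield draws one from the analyst only, and small talk draws none.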

Pattern: Fill-in-the-blanks#

A stencil is displayed, with some areas for user input and some areas for AI-generated content.

As the user fills in the inputs, the AI uses contextual information to generate more of the content. User and AI both work together until the full output has been generated.

Example: Writing a CV for a job. You start to fill in work history items. The AI suggests additional bullet points, which you accept or refuse. The AI suggests shorter, more focussed descriptions and word removal, which you accept or refuse.

Mimics: 📈 Collaborative white-boarding with colleagues (virtually or physically), collaborative card sorting exercises with a team.
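The stencil idea can be sketched as a list of slots, some owned by the user and some by the AI, where an AI slot is generated only once the user inputs it relies on are present. This is a simplified illustration under my own assumptions: `Slot`, `depends_on` and `stub_generate` are hypothetical names, and the accept/refuse step from the CV example is left out for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Slot:
    name: str
    owner: str                    # "user" or "ai"
    value: Optional[str] = None   # None means the blank is still empty
    depends_on: List[str] = field(default_factory=list)  # slots the AI needs as context


def stub_generate(slot_name: str, context: Dict[str, str]) -> str:
    """Hypothetical stand-in for a model call that drafts one AI-owned slot."""
    return f"Draft {slot_name} based on {', '.join(sorted(context))}"


def fill_ai_slots(
    slots: List[Slot],
    generate: Callable[[str, Dict[str, str]], str] = stub_generate,
) -> List[str]:
    """Fill every empty AI slot whose context is ready; return the names that were filled."""
    values = {slot.name: slot.value for slot in slots if slot.value is not None}
    filled = []
    for slot in slots:
        ready = all(dep in values for dep in slot.depends_on)
        if slot.owner == "ai" and slot.value is None and ready:
            context = {dep: values[dep] for dep in slot.depends_on}
            slot.value = generate(slot.name, context)
            filled.append(slot.name)
    return filled
```

Calling `fill_ai_slots` after each user edit gives the turn-taking feel of the pattern: nothing happens while the job title and employer are blank, and the bullet points appear once both are filled in.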

Pattern: Nudging controls#

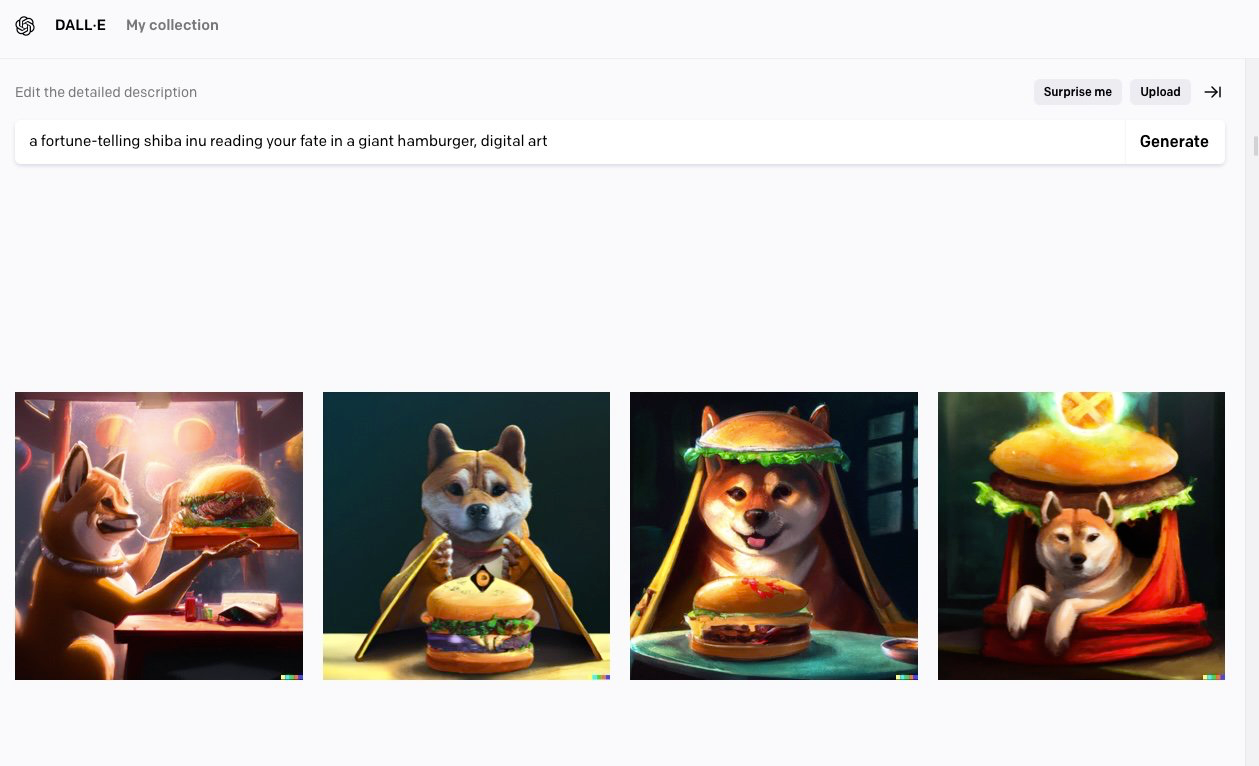

A "work in progress" is displayed in the centre while command buttons for "nudging" are displayed around the edges or off to the side. By clicking the buttons, you can ask the AI to change the work along some dimension.

Example: 3D image manipulation. We ask the AI to make the shape more or less rounded, more or less flat, etc.

In the wild: DALL-E image generator.

Mimics: 💺 Pairing with a designer, where the designer is tweaking this or that based on your input.
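One way to think about nudging controls is as a mapping from each button to a dimension and a step size. The sketch below applies the step directly to a numeric parameter, which is an assumption made to keep the example runnable; in an AI-backed tool the same button press would more likely become an instruction sent to a generative model along with the current work. The `Shape` fields and button labels are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Shape:
    roundness: float = 0.5  # 0.0 = sharp corners, 1.0 = fully rounded
    flatness: float = 0.5   # 0.0 = full depth, 1.0 = completely flat


# Each button maps to (dimension, step). Labels are illustrative only.
NUDGES = {
    "more rounded": ("roundness", +0.1),
    "less rounded": ("roundness", -0.1),
    "flatter": ("flatness", +0.1),
    "less flat": ("flatness", -0.1),
}


def nudge(shape: Shape, button: str) -> Shape:
    """Return a new work-in-progress moved one step along the button's dimension."""
    dimension, step = NUDGES[button]
    current = getattr(shape, dimension)
    # Round to avoid float drift, then clamp to the valid range.
    updated = min(1.0, max(0.0, round(current + step, 2)))
    return replace(shape, **{dimension: updated})
```

Returning a new `Shape` instead of mutating the old one keeps every intermediate version around, which makes an undo button straightforward to add.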

Further reading#

- Exploring Generative AI • Birgitta BÖCKELER

- Artificial Intelligence: A Modern Approach • Stuart RUSSELL, Peter NORVIG

- Co-Intelligence • Ethan MOLLICK

- Living with AI Discombobulation • Clay SHIRKY